AI Art Is Cool, And That's OK

Yup, here I am again with another Fifthdread Hot Take. I love AI Art, and I'm tired of pretending that I don't. AI has blown up in the past year, from ChatGPT for text generation to Midjourney and Stable Diffusion for generated art. AI is here, and it's here to stay. We can't put the genie back in the bottle on this one, and frankly, I wouldn't if I could. These AI tools are going to revolutionize the way we do things. Dread it. Run from it. AI arrives all the same. With that in mind, I'd like to focus on the art side of things, from the controversy around it to how I use it as part of my self-hosted setup.

The Controversy with Artists

I don't want to spend too much time on this, but I figure it's worth an overview for those interested. Many artists take issue with AI Art. To some, it threatens their livelihood. In some ways that's true, although not entirely. Some also claim that AI Art is plagiarism. While there may be cases where that holds in a sense, in my opinion it's not plagiarism. The art is used to train AI models, sure, but the model isn't storing that art. It's learning the art style in much the same way a human would. It's replicating it the same way I was told to replicate popular art styles in my school art class.

Frankly, the plagiarism argument is without merit. If I replicate an artist's style myself, there's no issue. If my AI replicates that style, there is? That doesn't make sense, and here's why. If I train an AI on my own art, there's no issue, right? It's my art, and I trained an AI with it. Now say I practice a popular artist's style, create several original works in that style, and then train my AI on those original works. The end result is the same: I've trained an AI to replicate an art style. Whether I did it with my own personal dataset or an online one, I've trained it all the same. So at the end of the day, you can't stop it. As I see it, there's no legal ground to stand on regarding how we train these AI tools. You can slow it down, but you're not going to stop it. So what should artists do?

Embrace AI Art For What It Is: An Awesome Tool

I'm loving Stable Diffusion on my personal Automatic1111 instance. I've spent hours just generating cool stuff, and I've enjoyed it quite a bit. My wife and I will spend time coming up with fun prompts and showing each other the most exciting results. I've developed a pretty awesome workflow that produces decent, high-quality results, and I'm pumped about it. I'm so pumped that I made a new website just to upload and share my AI Art Portfolio. Be warned: there are some NSFW images in there.

Automatic1111 is Amazing

For real. I'm running a few instances on different machines, and I have to say: wow. While it has a few bugs, it's completely usable and supports a few add-ons that are quite nice. I'm running my main instance on a server with an RTX 3090, using GPU passthrough to my Docker containers. You might think a 3090 is overkill for Stable Diffusion, but I assure you it wasn't such a bad idea. Not only does it generate art faster, it also has plenty of VRAM, and Stable Diffusion uses a ton of it. When I say a ton, I mean a lot. High-resolution images eat up so much VRAM that the 3090's 24GB gets eaten alive during large renders. Before the 3090, I was limited in how large I could make things before running into VRAM limits. Insane, but with how much I enjoy using Stable Diffusion, and with a friend willing to sell me the card for a great price, the 3090 turned out to be a solid play.
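
To get a feel for why resolution hits VRAM so hard, here's a rough back-of-the-envelope sketch. It is not a measurement of my setup. It assumes a Stable Diffusion 1.x-style model whose VAE shrinks the image by 8 on each side. It also assumes a naive self-attention layer with 8 heads that stores its full tokens-by-tokens score matrix in fp16. Real builds with memory-efficient attention (xformers, PyTorch SDPA) avoid most of this, so treat the numbers as illustrative only.

```python
# Illustrative estimate only. Assumptions: SD 1.x-style VAE (8x downsampling),
# naive attention that materializes the full score matrix, fp16 (2 bytes),
# 8 attention heads at full latent resolution.

VAE_DOWNSAMPLE = 8
BYTES_FP16 = 2


def latent_tokens(width: int, height: int) -> int:
    """Number of spatial positions in the latent at full latent resolution."""
    return (width // VAE_DOWNSAMPLE) * (height // VAE_DOWNSAMPLE)


def naive_attention_bytes(width: int, height: int, heads: int = 8) -> int:
    """Bytes for one image's attention score matrices at that resolution."""
    n = latent_tokens(width, height)
    return n * n * heads * BYTES_FP16


if __name__ == "__main__":
    for w, h in [(512, 832), (1024, 1664)]:
        gib = naive_attention_bytes(w, h) / 2**30
        print(f"{w}x{h}: {latent_tokens(w, h)} tokens, ~{gib:.2f} GiB of attention scores")
```

Doubling both sides multiplies the token count by 4. Because attention compares every token with every other token, the naive score matrix grows by 16.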

The "God Tier" Workflow

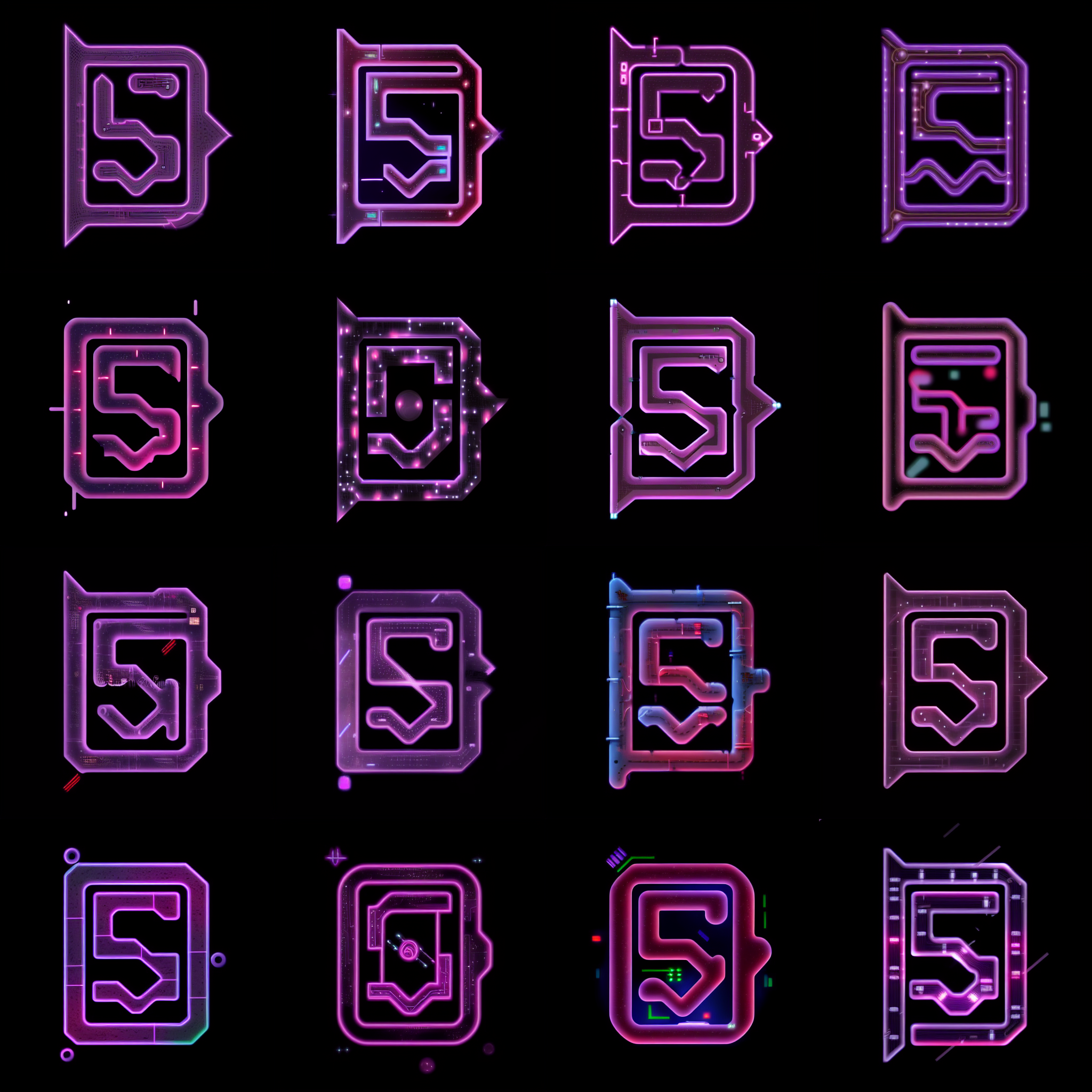

So you want to make something that looks amazing... Well, you've probably come to the wrong place, but I'll share what I do to get a decent result. First, it starts with picking the model. I've merged a few models myself, so I use one of three depending on the look I'm going for: Fifthdread Anime Mix, Art Mix, and Real Mix, each with a different overall "look".

Next is setting a few parameters, such as the image size. I'll set the width and height to something like 512 and 832, Sampling Steps to 35, Sampling Method to DPM++ 2M Karras, and CFG Scale to 7.
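
If you'd rather script this than click through the UI, the same settings map onto Automatic1111's web API. This is a minimal sketch that assumes the webui was started with `--api` and listens on the default `http://127.0.0.1:7860`. The `/sdapi/v1/txt2img` endpoint and field names are from the webui's API, but the sampler string is an assumption: newer versions may want "DPM++ 2M" plus a separate scheduler. The prompt strings are placeholders you'd fill in.

```python
import json
import urllib.request

# Assumed default address of a local Automatic1111 webui launched with --api.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_txt2img_payload(prompt: str, negative_prompt: str) -> dict:
    """Collect the txt2img settings described above into an API payload."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "width": 512,
        "height": 832,
        "steps": 35,
        # Older webui versions accept "DPM++ 2M Karras" as one sampler name;
        # newer ones may expect sampler "DPM++ 2M" plus a scheduler field.
        "sampler_name": "DPM++ 2M Karras",
        "cfg_scale": 7,
    }


def send(payload: dict, url: str = API_URL) -> dict:
    """POST the payload; the JSON response lists base64-encoded images."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Calling `send(build_txt2img_payload(...))` only works with a running webui. Building the payload on its own needs nothing but the standard library.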

Then I work on the prompt. You'll want to use both a positive and a negative prompt. There are great examples online, but here's an example of something I've used in the past.
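
Automatic1111 reads a prompt as comma-separated text, and its `(tag:1.2)` syntax bumps a term's weight up or down. This small helper assembles positive and negative prompts from weighted tags. The function names are my own, and the tags below are made-up placeholders, not the prompt used for the images in this post.

```python
def format_tag(tag: str, weight: float = 1.0) -> str:
    """Return a tag, wrapped in (tag:weight) emphasis syntax if weight != 1."""
    if weight == 1.0:
        return tag
    return f"({tag}:{weight:g})"


def build_prompt(tags: list[tuple[str, float]]) -> str:
    """Join weighted tags into one comma-separated prompt string."""
    return ", ".join(format_tag(tag, weight) for tag, weight in tags)


# Placeholder tags for illustration only; not the prompt used in this post.
positive = build_prompt([("portrait of a knight", 1.0), ("detailed armor", 1.2)])
negative = build_prompt([("blurry", 1.0), ("lowres", 1.0), ("extra fingers", 1.3)])
```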

Once I have a result I like, I save it and convert it to JPEG. I sometimes run into issues using PNG files in the next step, which is why I do this; it may not be necessary for everyone.

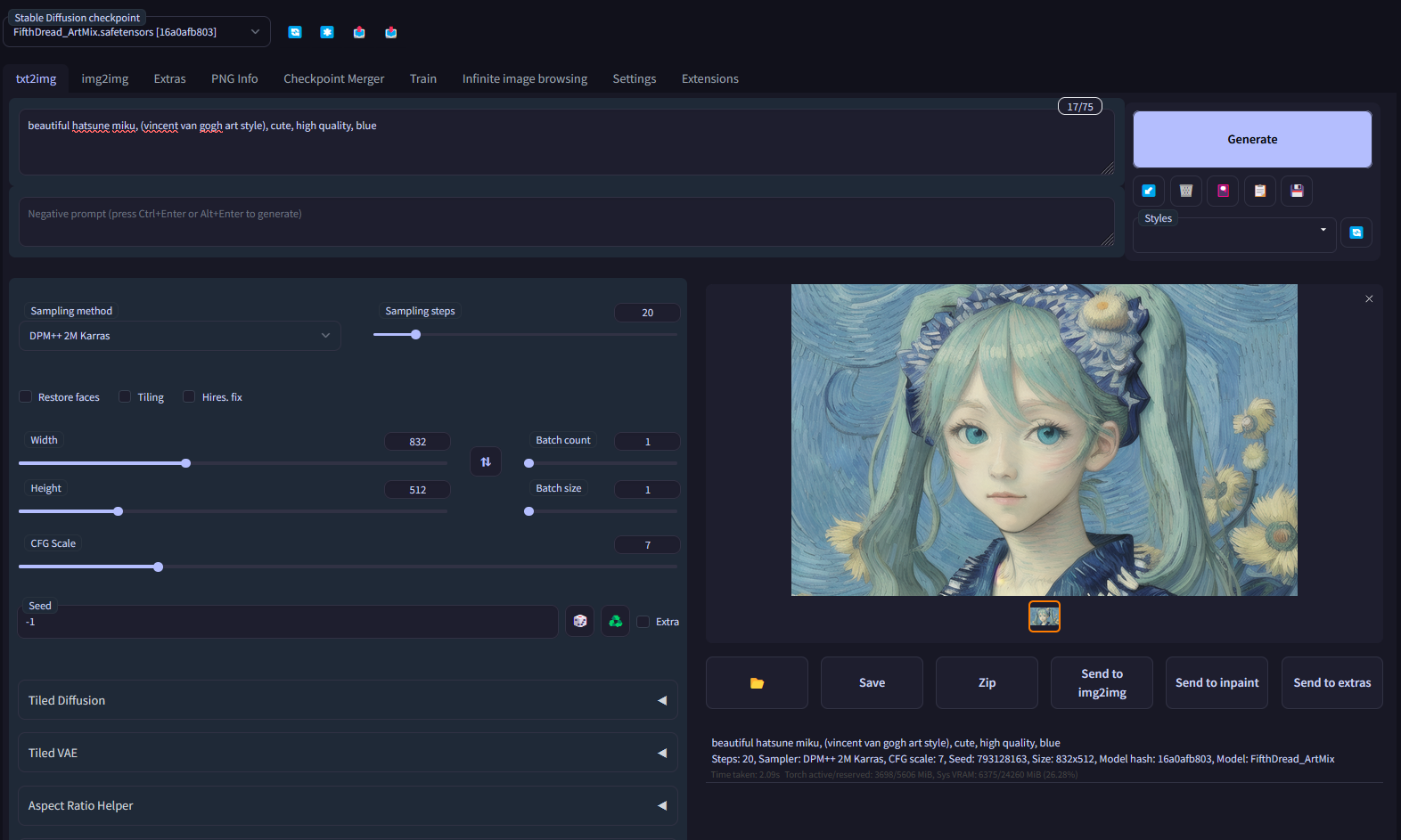

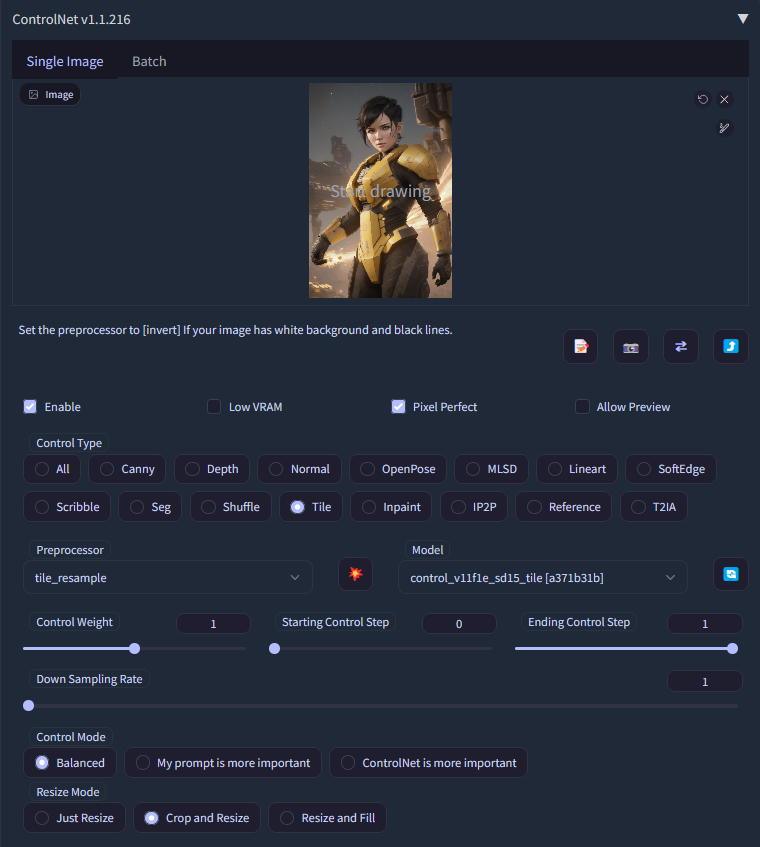

Next, I send the image to img2img. Set Sampling Method to the same one used above, Sampling Steps to around 26, and Denoising Strength to around 0.56. Leave the other settings alone for now and open up ControlNet.

Import the image into ControlNet, check Enable and Pixel Perfect, and click the Tile preset. That's it for ControlNet.
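
For scripting, the core img2img settings translate into a payload for the webui's `/sdapi/v1/img2img` endpoint. This sketch again assumes the API is enabled with `--api`. ControlNet and Ultimate SD Upscale are extensions whose API arguments differ between versions, so I've deliberately left them out rather than guess at field names; set them in the UI or check each extension's own docs.

```python
import base64


def build_img2img_payload(image_bytes: bytes, prompt: str, negative_prompt: str) -> dict:
    """Core img2img settings from the step above.

    ControlNet (Tile, Pixel Perfect) and Ultimate SD Upscale are extensions
    whose API arguments vary by version, so they are not included here.
    """
    return {
        # The webui expects source images as base64-encoded strings.
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": "DPM++ 2M Karras",  # same sampler as the txt2img pass
        "steps": 26,
        "denoising_strength": 0.56,
    }
```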

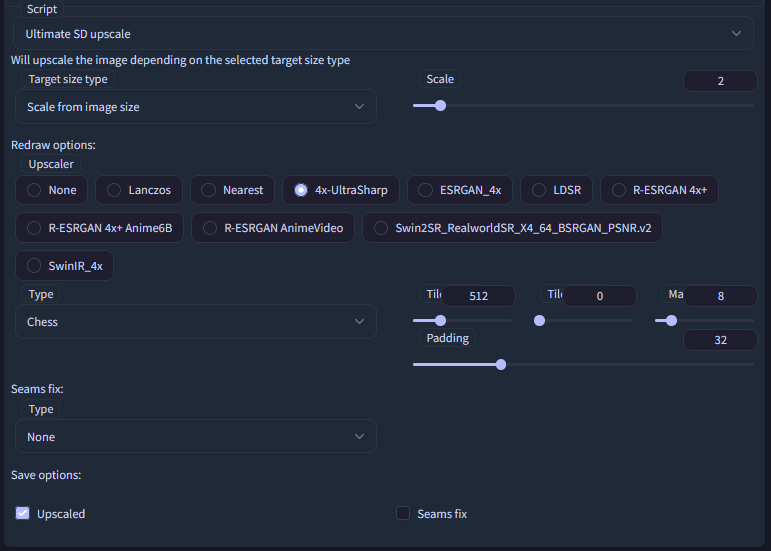

Now I use the Ultimate SD Upscale script. I set Target Size Type to "Scale from image size" and set the scale to 2, 2.5, or 3, depending on how big the source image was: the smaller the source, the bigger the upscale. It really depends.
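
"Scale from image size" multiplies both sides by the factor. This sketch shows the resulting sizes, and the script itself may round slightly differently. The `pick_scale` helper and its `target_long_edge` parameter are hypothetical, one way to encode "smaller source, bigger upscale" as a rule. They are not a setting in the script.

```python
SCALES = (2.0, 2.5, 3.0)  # the factors mentioned above


def scaled_size(width: int, height: int, scale: float) -> tuple[int, int]:
    """Approximate output size when scaling from the source image size."""
    return round(width * scale), round(height * scale)


def pick_scale(width: int, height: int, target_long_edge: int) -> float:
    """Hypothetical rule of thumb: the smallest listed factor whose result
    reaches target_long_edge, so smaller sources get bigger factors."""
    long_edge = max(width, height)
    for scale in SCALES:
        if long_edge * scale >= target_long_edge:
            return scale
    return SCALES[-1]  # cap at the largest factor
```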

Then set Upscaler to 4x-UltraSharp and Type to Chess.
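
Ultimate SD Upscale redraws the enlarged image tile by tile. As I understand it, Linear processes tiles row by row, while Chess works through them in a checkerboard pattern, which helps hide seams. This sketch illustrates that ordering. It is not the script's actual code, and it assumes 512-pixel square tiles.

```python
import math


def tile_grid(width: int, height: int, tile: int = 512) -> tuple[int, int]:
    """Columns and rows of square tiles needed to cover the image."""
    return math.ceil(width / tile), math.ceil(height / tile)


def chess_order(cols: int, rows: int) -> list[tuple[int, int]]:
    """Visit (col, row) tiles checkerboard style: all 'white' squares first,
    then all 'black' ones, instead of straight row-by-row (linear) order."""
    tiles = [(c, r) for r in range(rows) for c in range(cols)]
    white = [t for t in tiles if (t[0] + t[1]) % 2 == 0]
    black = [t for t in tiles if (t[0] + t[1]) % 2 == 1]
    return white + black
```

Under these assumptions, a 512x832 source at 2x (1024x1664) needs a 2-by-4 grid of tiles.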

Everything should be good to go. Hit the Generate button at the top and let the first upscale commence.

Once you have a clean result, send it to Extras for the final upscale. I set Resize to 2 and Upscaler 1 to R-ESRGAN 4x+ for the best results. There are a few other cool upscalers, but I like this one most of the time. Hit Generate to get the final image.
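
The Extras step also has an API counterpart, `/sdapi/v1/extra-single-image`. This sketch assumes the webui is running with `--api`, and that `resize_mode` 0 corresponds to the UI's "scale by" mode. Double-check the field names against your webui version's `/docs` page.

```python
import base64


def build_extras_payload(image_bytes: bytes) -> dict:
    """Final-upscale settings from the Extras step above."""
    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "resize_mode": 0,            # assumed: 0 = scale by a factor
        "upscaling_resize": 2,       # "Resize: 2" in the UI
        "upscaler_1": "R-ESRGAN 4x+",
    }
```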

Done! You can download the huge image and use it. Of course, it'll have flaws; AI Art is hard to get perfect without some manual edits. I will say that the PNG file will be huge, so it's best to convert it to a reasonable-quality JPEG.
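
Some quick arithmetic shows why the final file gets so big. Take an example chain: a 512x832 base, 2x in Ultimate SD Upscale, then 2x in Extras. The 2x first pass is an assumption, since I sometimes use 2.5 or 3. PNG compresses losslessly, so it still has to preserve every one of those pixels exactly, which is why a JPEG usually ends up much smaller.

```python
def final_size(width: int, height: int, *scales: float) -> tuple[int, int]:
    """Apply each upscale factor in turn and return the final dimensions."""
    for s in scales:
        width, height = round(width * s), round(height * s)
    return width, height


def raw_rgb_mib(width: int, height: int) -> float:
    """Uncompressed 8-bit RGB size in MiB, before any PNG/JPEG compression."""
    return width * height * 3 / 2**20


# Example chain: 512x832 base, 2x Ultimate SD Upscale, then 2x in Extras.
w, h = final_size(512, 832, 2, 2)
```

Here that works out to 2048x3328, or 19.5 MiB of raw pixel data before compression.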

Getting it just right

It takes a lot of time to get a perfect result. With the image above, I'd have spent more time cleaning up the purple blotches around the hair, and I'd have generated several variants with slightly different parameters to fine-tune the result toward the look I want. Overall, though, it's a cool image that captures what I was looking for.

Thanks for reading, and check out my new AI Art Portfolio website at aiart.fifthdread.com