Quick update on Fifthdread Services

A week ago, I was devastated. I accidentally cloned a drive to the wrong destination, and as a result, I lost all my Hyper-V VMs. I’ve spent the past week rebuilding everything from scratch, and this time I set out to do things cleaner, smarter, and just overall better. In this blog post, I’ll go over my new server build, what software I have running, and some other cool things that I did along the way.

It should really be labeled “PROXMOX / NAS / DOCKER”

First, I started with a new server. I already had plans to build a new NAS machine, but what I hadn’t planned on was also using it to house all my services. The truth is, it’s a great machine for the job: built from my old gaming PC’s hardware, it’s very capable. Let’s run down the hardware in the new server…

- AMD Ryzen 9 5950X 16-core, 32-thread CPU

- 64GB ECC Memory

- 2x 2TB Samsung 980 PRO NVMe SSDs

- GTX 1080 GPU

So not a bad setup for a server. I decided to take data integrity very seriously with this build, which is why I bought ECC memory for it. I also decided to run the two 2TB SSDs in the equivalent of RAID 1, which is achieved by setting up a ZFS mirror between the two drives. That gives us drive redundancy, which is super nice: if one SSD dies, the other still holds a full copy of the data.

Everything is in place with the exception of the actual NAS portion of the build, which will be deployed once I get the 12 drives in place.

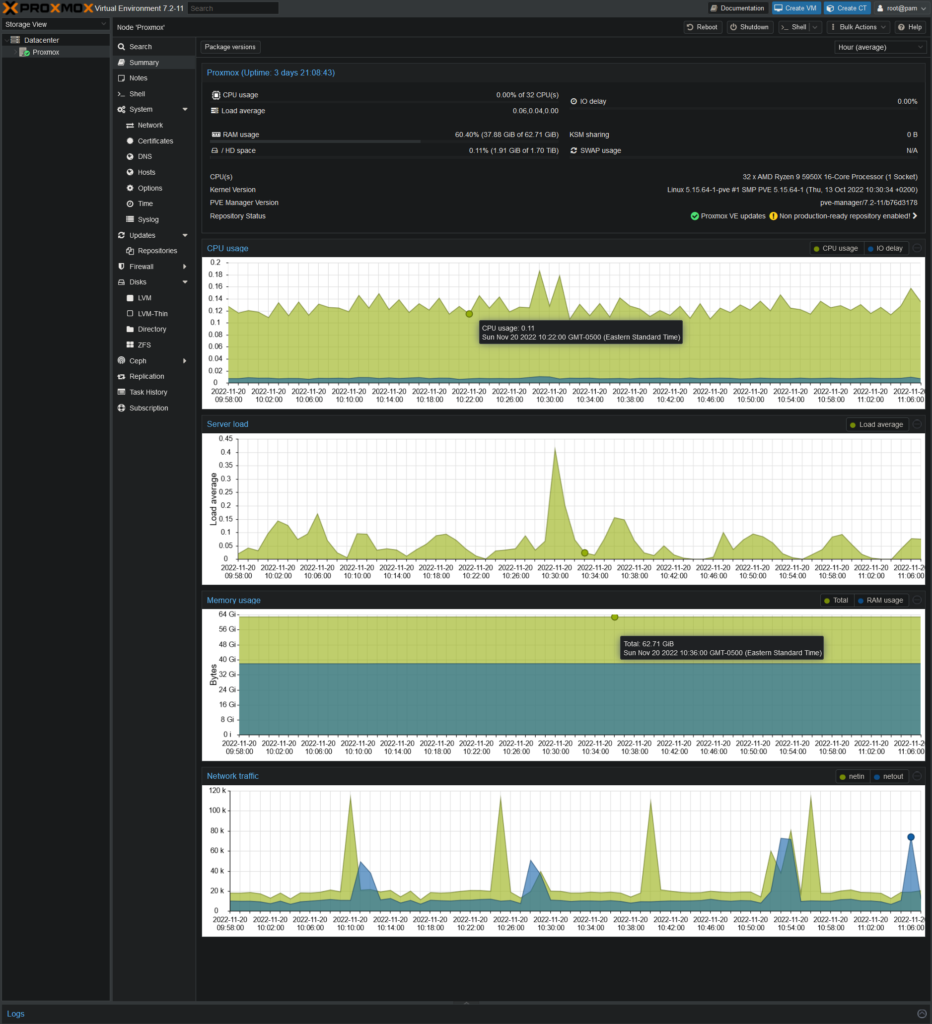

Now that we have the hardware out of the way, let’s talk about the software. The first thing I did was install Proxmox. For those interested, here is how they describe it:

Proxmox VE is a complete open-source platform for enterprise virtualization. With the built-in web interface you can easily manage VMs and containers, software-defined storage and networking, high-availability clustering, and multiple out-of-the-box tools on a single solution. – Proxmox website

Proxmox VE is built on Debian Linux and is the starting point for everything else. It lets me create and manage VMs, all from a very nice web UI.

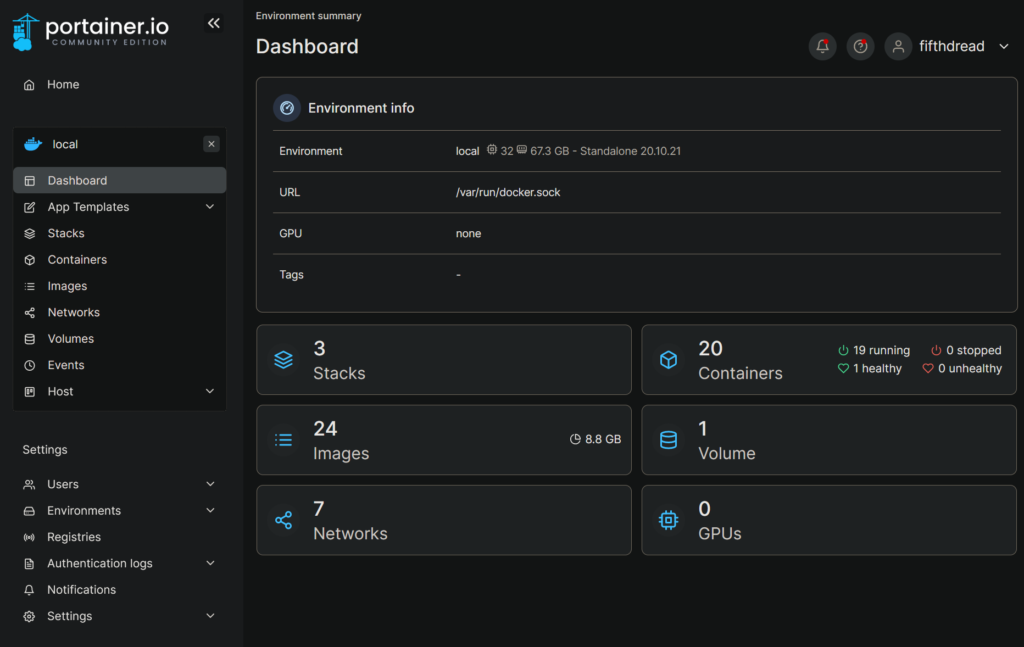

From here, I installed Docker with the help of my good friend Allyn, along with Portainer, a container that provides a web UI for managing Docker containers.

Now that we have Proxmox and Docker set up, the sky’s the limit. We can deploy VMs or containers at will, and thanks to my buddy Allyn, I’m convinced that containers are the way to go for 99% of things. That’s why I currently have 20 containers and 0 VMs. Containers are lightweight, and as a result, very efficient.

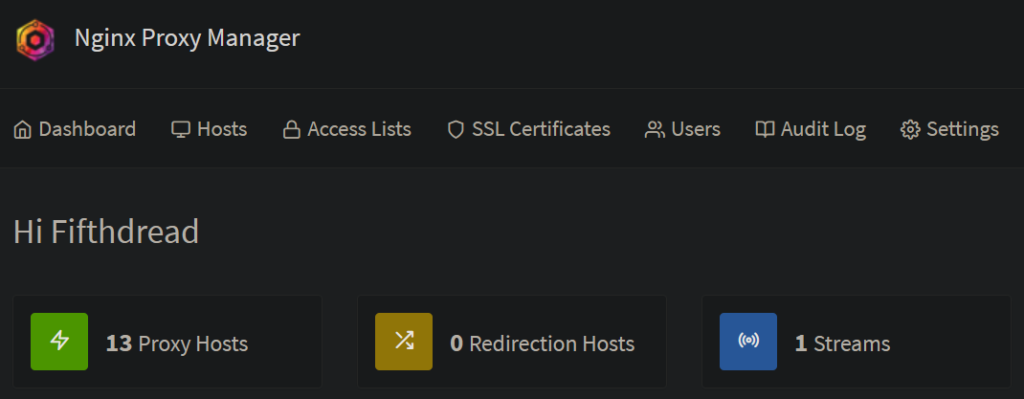

The next thing was to set up something I only had HALF set up before, and that’s a reverse proxy. After a little research, I decided to go with NGINX, or more specifically, NGINX Proxy Manager.

This is one of the most exciting things for me about the new setup. With it, I can manage all my web services, their SSL certificates, and their domain names in one place. It’s very powerful. Previously, I had to deploy SSL certificates manually for about a dozen machines… Now, certificate renewal is handled automatically for every website I host. Also, each of my services used to be exposed on its own port, which I had to port-forward at the router. This was a pain, as anyone using Fifthdread Services had to remember which port went with which service. We have DNS for a reason, and one of those reasons is that humans can’t memorize numbers as well as words. Now, every service is available under its own name, such as portal.server.fifthdread.com or up.server.fifthdread.com.
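To make the idea concrete, here is a minimal Python sketch of what name-based routing boils down to: the proxy looks at the hostname in the request and forwards to whichever internal address is mapped to it. This is not how NGINX Proxy Manager is implemented. The two hostnames come from this post, but the internal IP address and ports are made up for illustration, and `resolve_upstream` is a hypothetical helper name.

```python
from typing import Optional

# Hypothetical hostname -> internal address table. The hostnames are from
# the post; the internal IP and ports are invented for this example.
ROUTES = {
    "portal.server.fifthdread.com": "http://192.168.1.10:8080",
    "up.server.fifthdread.com": "http://192.168.1.10:3001",
}


def resolve_upstream(host_header: str) -> Optional[str]:
    """Return the internal URL for a request's Host header, or None if unknown."""
    # Drop an optional ":port" suffix and normalise case, since hostnames
    # are case-insensitive. (Bracketed IPv6 literals are not handled here.)
    hostname = host_header.split(":", 1)[0].strip().lower()
    return ROUTES.get(hostname)


if __name__ == "__main__":
    for host in (
        "portal.server.fifthdread.com",
        "UP.server.fifthdread.com:443",
        "unknown.example.com",
    ):
        print(host, "->", resolve_upstream(host))
```

With a table like this, only the proxy’s own ports need forwarding at the router, and everything else is decided by name.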

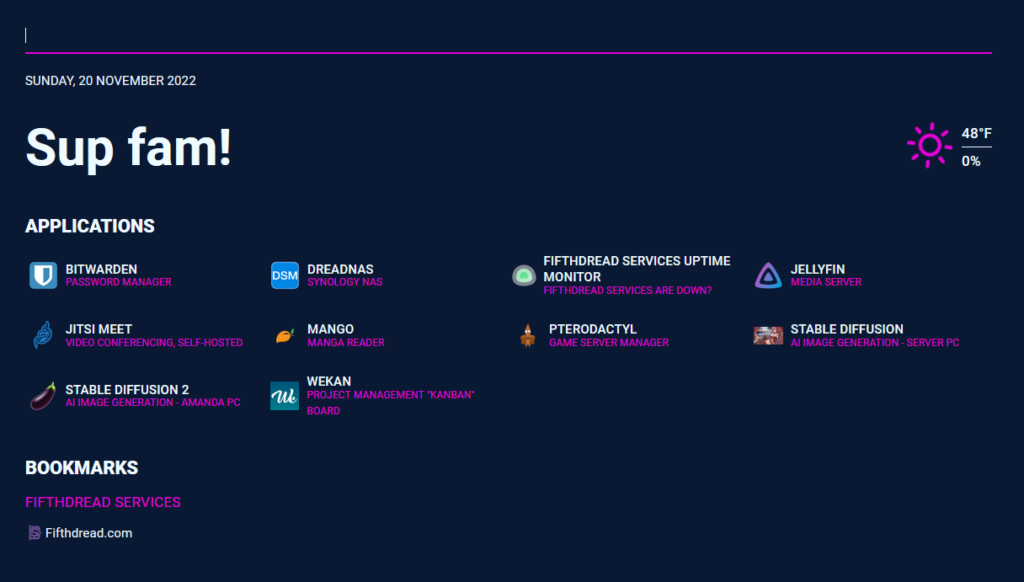

Now that I have so many services, I wanted an easy way to navigate between them. That’s where a “portal” page comes in. This page has a public-facing portion (shown above) which is accessible by anyone. It lists the services I provide to my friends and family, which they can reach straight from the portal. It gets even more convenient once I log in to the page, as it then shows me all my management links too. It’s made getting around my stuff very easy, and I’m glad to have it.

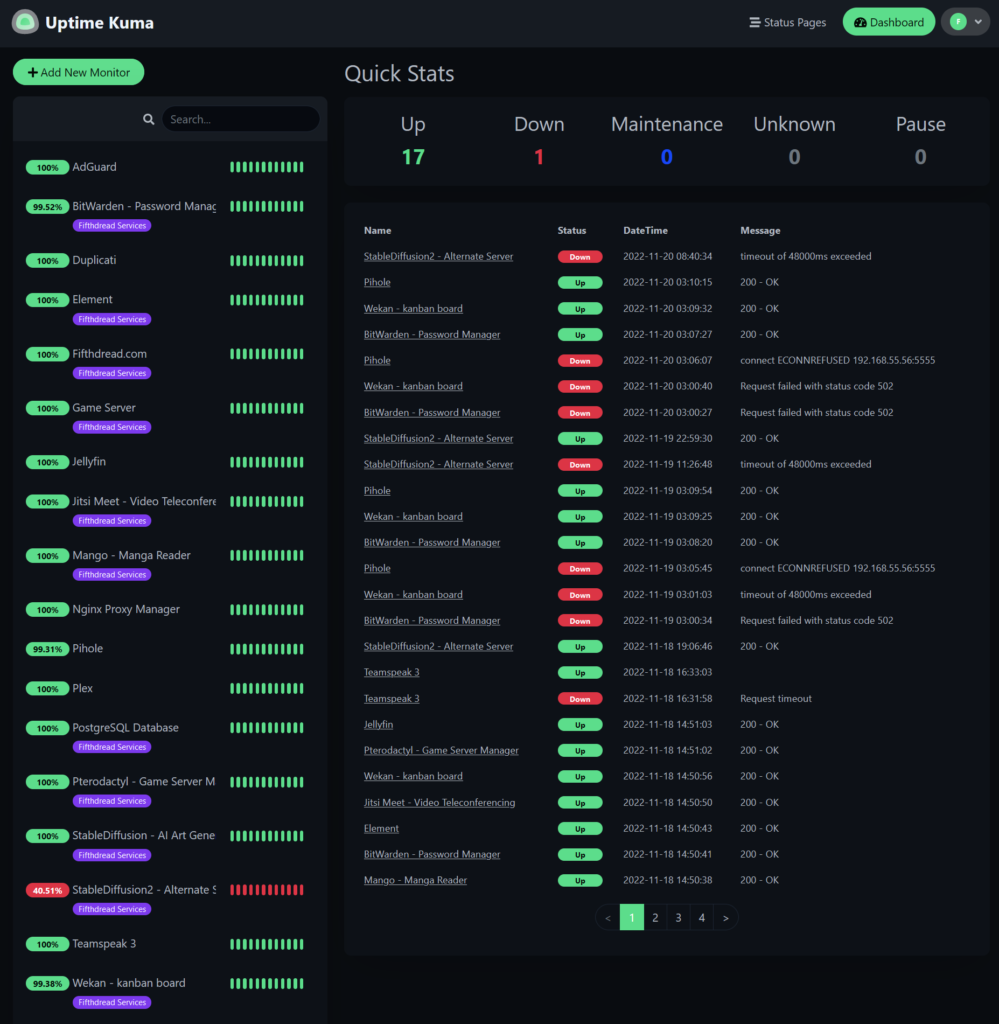

With all these services, I wanted a cool and easy way to monitor them. That’s where Uptime Kuma comes in. While I still need to configure it a little more, it’s been super effective at monitoring all my various services. I mean, just look at how cool it is! I have it set to send me email notifications whenever a service goes down, so I can fix the problem ASAP. I suppose the only real issue here is that Uptime Kuma runs in a Docker container on the very box it’s monitoring. If the entire server went down for some reason, I wouldn’t get notified about that. I don’t think that will be much of a problem since the machine should be very stable.
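For anyone curious what a basic “is it up?” check looks like, here is a rough Python sketch using only the standard library. It is a stand-in for illustration, not Uptime Kuma’s actual logic, and the function names `is_up` and `check_all` are my own. The `opener` parameter exists only so the check can be exercised without touching the network.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def is_up(url: str, timeout: float = 5.0,
          opener: Callable = urllib.request.urlopen) -> bool:
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Covers DNS failures, refused connections, timeouts, and the
        # HTTPError that urlopen raises for 4xx/5xx responses.
        return False


def check_all(urls: Iterable[str], **kwargs) -> Dict[str, bool]:
    """Check every URL and return a url -> up/down mapping."""
    return {url: is_up(url, **kwargs) for url in urls}


if __name__ == "__main__":
    # Hostnames from the post; run this only where they are reachable.
    services = [
        "https://portal.server.fifthdread.com",
        "https://up.server.fifthdread.com",
    ]
    for url, ok in check_all(services).items():
        print(("UP  " if ok else "DOWN"), url)
```

Running a small check like this from a second machine would also catch the case where the whole server goes down, which a monitor living on the same box can’t report.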

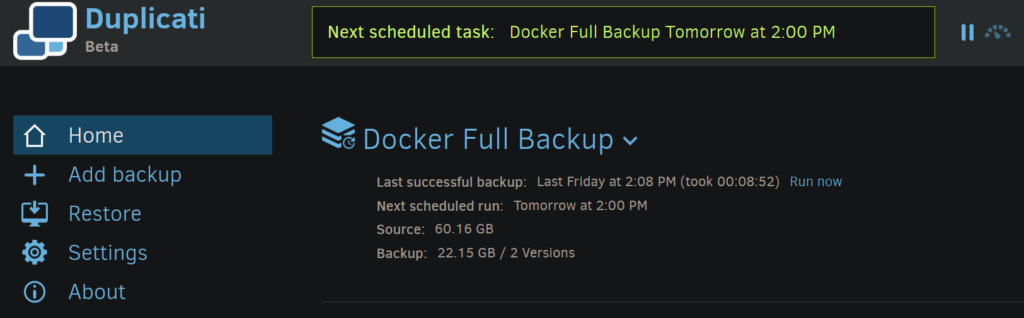

One of the “lessons learned” from destroying the old servers is that I really needed an automated backup solution. If I had stayed on Hyper-V, I’d have used Veeam to back up my VMs. Since I have everything in Docker containers now, I needed something a little more specific to this setup. That’s where Duplicati comes in. It’s configured to automatically back up my Docker data folders three times a week. Once I get my old NAS migrated over, I’ll start doing daily backups. I’m super pumped to finally have some real backups.
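The core idea is simple enough to sketch in Python: on the scheduled days, pack the data folder into a timestamped archive somewhere else. To be clear, this is a plain tarball stand-in and not what Duplicati does internally. The Monday/Wednesday/Friday schedule and the function names are assumptions for the example, since all I’ve said above is “three times a week”.

```python
import tarfile
from datetime import datetime
from pathlib import Path
from typing import Optional

# Assumed schedule: Monday, Wednesday, Friday (weekday() numbers 0, 2, 4).
BACKUP_WEEKDAYS = {0, 2, 4}


def is_backup_day(moment: datetime) -> bool:
    """Return True if a backup is scheduled on this date."""
    return moment.weekday() in BACKUP_WEEKDAYS


def backup_folder(source: Path, dest_dir: Path,
                  moment: Optional[datetime] = None) -> Path:
    """Write source into a timestamped .tar.gz inside dest_dir and return its path."""
    moment = moment or datetime.now()
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive = dest_dir / f"{source.name}-{moment:%Y%m%d-%H%M%S}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the folder name.
        tar.add(source, arcname=source.name)
    return archive


if __name__ == "__main__":
    # Hypothetical paths; point these at a real data folder and backup target.
    data_dir = Path("docker-data")
    target_dir = Path("backups")
    if data_dir.is_dir() and is_backup_day(datetime.now()):
        print("Wrote", backup_folder(data_dir, target_dir))
```

A real tool adds the parts that matter long term, such as incremental uploads, encryption, and retention, which is why I’m using Duplicati rather than a script.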

Everything is mostly back to normal

I’ve deployed Matrix Synapse (the server behind Element), Jitsi video conferencing, Murmur (Mumble), Plex, Jellyfin, WireGuard, AdGuard, Mango Manga Reader, and more. I’m finally mostly spun back up. I still need to deploy Snipe-IT, my inventory management system, and a few other things, but I’m proud of the current setup as it is. It’s super clean now, and it no longer runs in Hyper-V on a Windows 10 Pro installation, which is nothing but a good thing. I’ve made the old server almost completely irrelevant, and I’ll redo that server after I get the new NAS online.

It’s been a struggle this past week, but ultimately, I’m glad that I crashed the server. It’s sort of funny to say, but I spent the last week learning a LOT about a ton of stuff, and I ended up with a setup which I’m proud of. It’s secure, it’s fast, and it has backups and redundancy. It’s just overall fantastic. My server rack is awesome, and I just put some cool RGB lights in it as the icing on the cake. Would I do it again? Hopefully not by force, but it did wonders for my home lab.